Imagine that you wake up one morning, take a sip of your delicious coffee, and open your laptop to take a look at your site when suddenly … you see that it went offline.

At some point, every webmaster has to face an SEO nightmare in the form of website downtime.

When your website goes down, you need to act quickly before Google starts punishing your pages one by one.

The question is: How exactly will downtime affect your SEO efforts?

In this article, we will take a look at:

- What website downtime exactly is (and its possible causes)

- How it affects SEO

- What to do during website downtime (best SEO practices)

Without further ado, let’s dive deep into this nightmarish topic.

What is website downtime?

Website downtime (or outage) is a period during which the site is completely or partially inaccessible to users due to certain technical issues.

Here’s what a regular website visitor might see during the downtime period:

Depending on the outage duration, a website that goes “down” may experience a dramatic decrease in organic traffic, ranking drops, or even removal from Google Index.

What causes the site to go “down”?

There are numerous reasons why a website may experience downtime – software problems, human error, server issues, you name it.

Let’s take a quick look at a few common causes of downtime.

1. Human error

Let’s be honest, people make mistakes all the time.

One of the most common causes of website outages is simply human error – whether it is implementing a malfunctioning feature, or just an accidental click of a button that brings the whole website down.

According to a survey by Veriflow, human error may be behind 75% – 97% of site outages (whether due to lack of training, time pressure, or simply stress).

Although downtime caused by human error can be a somewhat frustrating experience, it is also (usually) the easiest problem to solve.

2. Hosting & hardware issues

Web hosting is the place where your site lives – and another common cause of website downtime.

Although you can get decent hosting service pretty easily these days (and for a reasonable price), it does not mean that it will be flawless all the time.

For example, your hosting server may experience a huge (and unexpected) traffic spike that could slow down your pages or even bring your whole website down.

3. Poor security

Unfortunately, website attacks are still on the rise and are quite a common cause of site outages.

No matter the reason, any unprotected website can be easily brought down by a malicious attack from cybercriminals – whether it is via a DDoS (distributed denial-of-service) attack, hacking, virus infection, or any other malicious strategy.

For example, DDoS attacks can take out even the largest websites simply by overloading their servers with a tremendous amount of requests.

In fact, Norton – a popular anti-malware software company – describes a DDoS attack as “one of the most powerful weapons on the internet”.

Due to website attacks like these, having robust security features that can protect your site is a must – now more than ever.

4. Software incompatibility

Implementing a new theme, plugin, or any piece of new software is a regular practice that happens on websites from time to time.

Unfortunately, even a small software implementation like the installation of a WP plugin may accidentally cause a website to go offline.

Why?

Well, because of software incompatibility – new code might not like the code of your website 😅.

However, these kinds of outages can be easily avoided by following simple practices like:

- Reading through software documentation (and checking if it is compatible with your website).

- Visiting forums and review pages for possible problems and troubleshooting.

- Testing software before installing it on a “live version” of your site.

How does downtime affect SEO?

Depending on the timeframe, a downtime period may significantly damage your SEO efforts – it can cause a huge loss in organic traffic, ranking drops, or even the removal of the site from the Google Index.

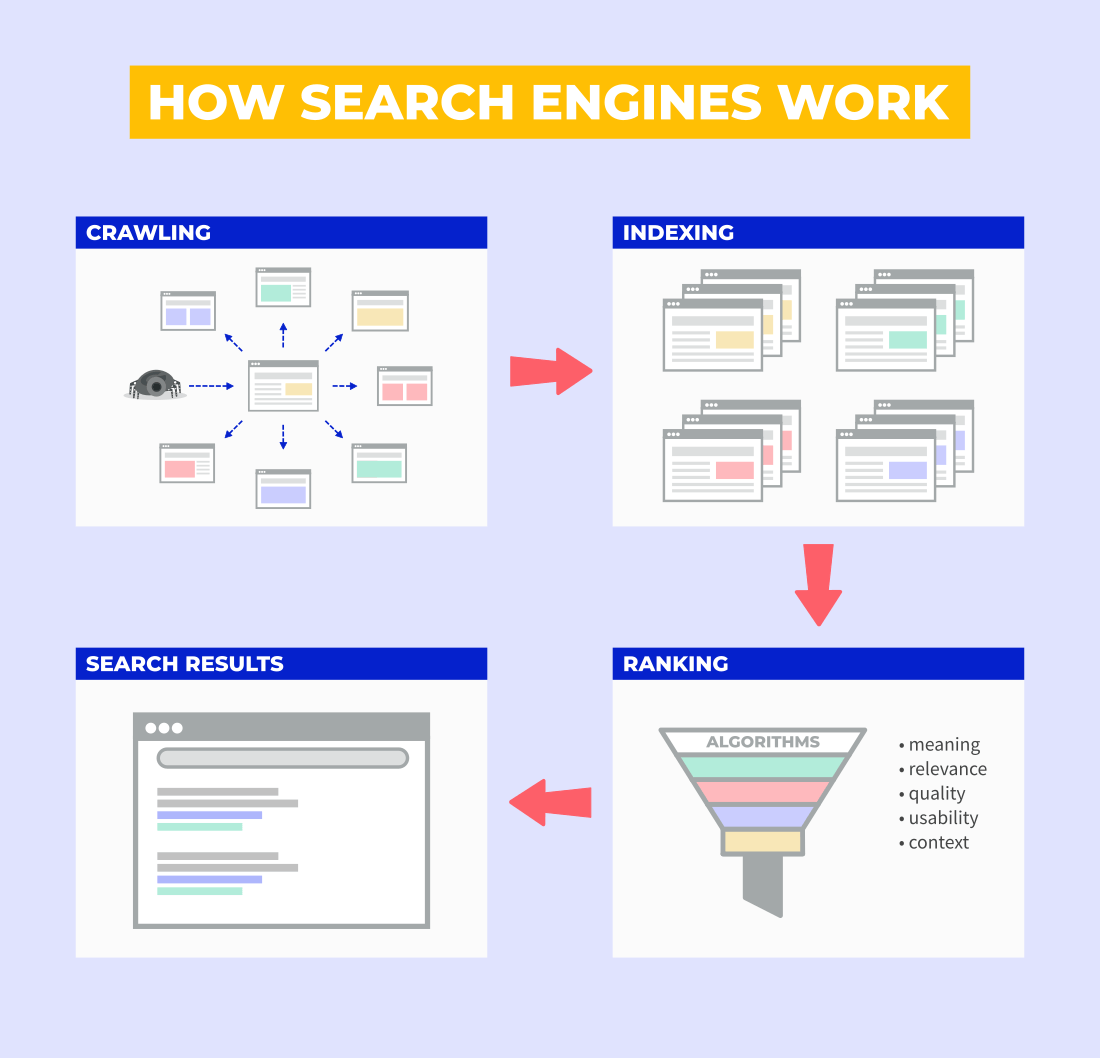

Google collects data about web pages with its crawler, called Googlebot. Crawlers can visit (and revisit) any website that is available on the internet (via links, sitemaps, etc.), scan its content, and add it to the Google Index for ranking purposes:

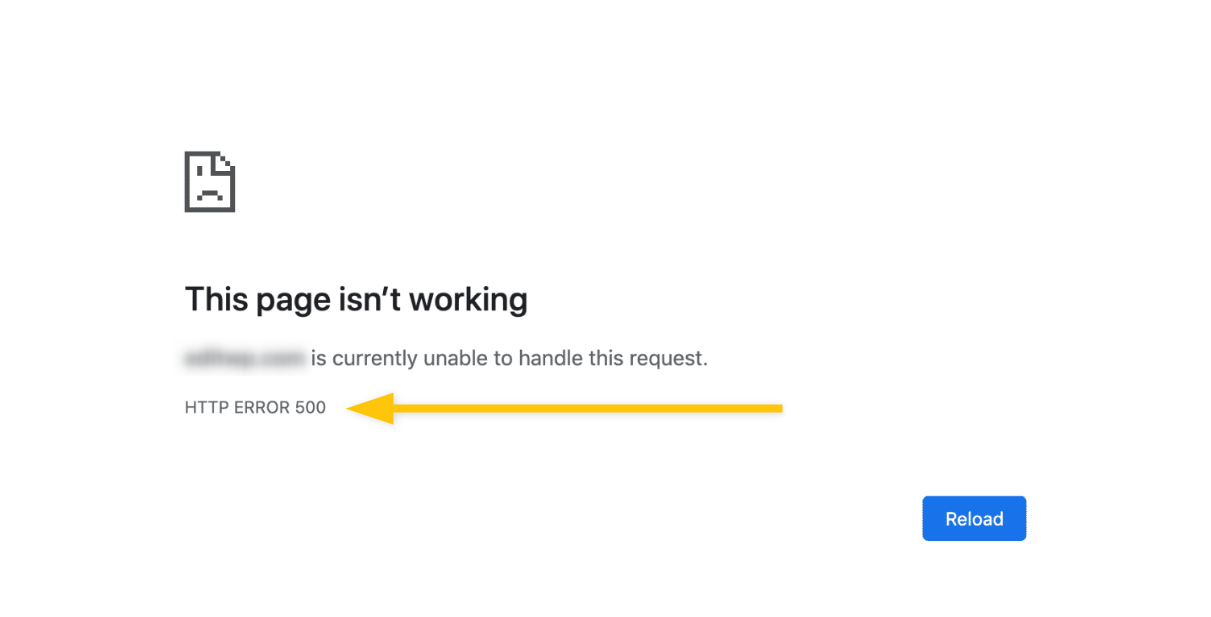

Whenever Googlebot visits a website that has an outage, it will typically receive an HTTP 5xx status code (such as HTTP 500), an error response indicating that the website’s content is unavailable due to a server-side problem, or it will get no response at all.

Although a website like this can be unavailable to crawlers (and users as well), Googlebot won’t give up that easily. It will try to revisit the site later to see if it’s back online and running.

In a scenario like this, the impact of downtime on your SEO efforts is not that significant (yet) for the first couple of hours:

“If the URL returns HTTP 5xx or the site is unreachable we’ll retry over the next day or so. Nothing will happen (no drop in indexing or ranking) until a few days have passed.” (John Mueller, Google Search Advocate).

However, if your website is experiencing a longer downtime period, Google will start de-ranking its pages and eventually drop them from its Index.

Matt Cutts (Former head of the web-spam team at Google) further explains how Google deals with websites that experience downtime:

Let’s take a quick look at what exactly happens during a longer downtime period.

a) Ranking drops

Any website that is “offline” for more than just a couple of days may start experiencing a significant drop in Google rankings.

To be clear, Google won’t punish you just for an accidental outage or planned maintenance – during the lifespan of a website, it is quite natural to go offline from time to time when you are making some significant changes to your site or simply fixing an unexpected technical issue that won’t take long:

Keep in mind, though, that even Google can “lose its patience”. If the search engine still can’t reach your pages after a few days, it will eventually start de-ranking them in its SERPs and (after some time) start the process of deindexation.

b) Deindexed pages

Crawling and indexing are the essential parts of SEO – if your pages are not indexed, they basically don’t exist in the eyes of Google (and its users).

That’s why longer downtime periods can be so dangerous to any website – if Google doesn’t see your site back online for a long period of time, it will assume that it’s not coming back and remove all your pages from the Google Index:

“Completely closing a site even for just a few weeks can have negative consequences on Google’s indexing of your site.” (Google Search Central)

In a scenario like this, getting back online and back to Google Index is basically like starting from scratch – your website will have to get properly crawled and indexed by Google all over again, build up its SEO authority from zero, and gradually improve its rankings as any new site would.

What to do during the downtime period?

Well, first of all: Don’t panic!

No matter the technical issue that caused the outage, it is just a matter of time until you (or your team) find a solution and fix the problem.

From the SEO perspective, the most important question you should ask yourself during the downtime is:

“How long exactly will it take to get back online?”

If your site is down for just a couple of minutes or hours (e.g. due to maintenance), you don’t necessarily have to do anything in terms of SEO.

On the other hand, if you have no idea how long the website outage will last (or it is obvious that it will take a long time to get back online), you might consider implementing a few protective measures that can buy you some time before Google starts de-ranking and deindexing your pages.

1. Implement (HTTP) 503 status code

The HTTP 503 (Service Unavailable) status code is a server response indicating that your site is temporarily unavailable due to an unexpected technical issue or maintenance.

It also serves as a message to web crawlers (like Googlebot) that basically says:

“Hey, sorry, but our site is temporarily unavailable, please come back later.”

Search engines like Google take the 503 status code into consideration as an indication that your website did not disappear from the internet for good – it is also recommended by Google representatives as a way to “buy you some time” until your website’s outage is resolved:

https://twitter.com/JohnMu/status/1359505366992756744

By returning HTTP 503, you can prevent your website from being deindexed by Google (and preserve your rankings) for about a week or so before Google starts treating your pages as permanently unavailable.
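To make this more concrete, here is a minimal sketch of a tiny WSGI application in Python that answers every request with a 503. It is only an illustration, not a recommended production setup: the function name `maintenance_app`, the one-hour `Retry-After` value, and the page text are all assumptions made for this example. `Retry-After` is a standard HTTP header that tells clients when to try again.

```python
# Assumption: one hour is a reasonable hint; adjust to your expected outage length.
RETRY_AFTER_SECONDS = 3600

# Assumption: placeholder page text, not taken from any real site.
MAINTENANCE_BODY = (
    b"<h1>We'll be back soon</h1>"
    b"<p>Our site is temporarily unavailable, please come back later.</p>"
)


def maintenance_app(environ, start_response):
    """WSGI app that replies to every request with 503 Service Unavailable."""
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(MAINTENANCE_BODY))),
        # Standard HTTP header hinting when the client may retry.
        ("Retry-After", str(RETRY_AFTER_SECONDS)),
    ]
    start_response("503 Service Unavailable", headers)
    return [MAINTENANCE_BODY]
```

To try it locally, you could serve it with the standard library, for example `wsgiref.simple_server.make_server("", 8080, maintenance_app).serve_forever()`. On a real site, the same response would more likely be configured in your web server or CMS.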

For more information on how to create a 503 status code for your site, check out this short article.

Note: HTTP status codes are grouped into classes that indicate different states of a page or website:

- HTTP 2xx (e.g. 200) – Everything is okay with your site, and the content on its pages should be available to users (and crawlers) without any problem.

- HTTP 3xx – Redirection messages. They are used for temporary or permanent redirects when pages are moved to different locations (URLs).

- HTTP 4xx – These status codes indicate an issue on the client side (e.g. HTTP 404 means that the page could not be found, either because it was deleted or because the URL was mistyped).

- HTTP 5xx – These indicate a server-side problem, such as the one that causes website downtime.
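The groups above depend only on the first digit of the status code, which the following short Python sketch illustrates. The helper names `status_class` and `signals_downtime` are made up for this example.

```python
from http import HTTPStatus

# First digit of the status code -> class name.
STATUS_CLASSES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_class(code: int) -> str:
    """Return the class of an HTTP status code based on its first digit."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]


def signals_downtime(code: int) -> bool:
    """True for 5xx codes, which point to a server-side problem."""
    return status_class(code) == "server error"
```

For example, `status_class(404)` returns `"client error"`, `signals_downtime(503)` returns `True`, and the standard library can give you the official phrase via `HTTPStatus(503).phrase`.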

If you would like to know more about individual HTTP status codes, their meaning, and usage, check out this official documentation.

2. Create a maintenance page

The maintenance page (or a static page) is a temporary placeholder that can be displayed instead of a blank error page during unexpected downtime or throughout the maintenance period.

It can inform visitors that the web page is temporarily unavailable and direct them to a different location:

Maintenance pages can give you some control over the incoming organic traffic – you can tell website visitors to be patient and return to your site later, or provide links to other pages that they might find useful.

Creating a static page is not rocket science (though it requires a bit of experience) – for more information, check out this fast and easy guide for maintenance pages.
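If you are curious what such a page boils down to, here is a rough Python sketch that renders a static maintenance page with a short message and a few helpful links. The function `render_maintenance_page`, the template, and the example URL are all hypothetical. During an outage, a page like this would typically be served together with the 503 status code described in the previous section.

```python
from html import escape
from string import Template

# Assumption: a deliberately bare-bones layout, with no styling.
PAGE_TEMPLATE = Template("""<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>$title</title></head>
<body>
<h1>$title</h1>
<p>$message</p>
<ul>
$links
</ul>
</body>
</html>
""")


def render_maintenance_page(title, message, links):
    """Build the HTML of a static maintenance page.

    `links` is a list of (label, url) pairs pointing visitors elsewhere.
    """
    items = "\n".join(
        f'<li><a href="{escape(url, quote=True)}">{escape(label)}</a></li>'
        for label, url in links
    )
    # Escape user-facing text so special characters cannot break the markup.
    return PAGE_TEMPLATE.substitute(
        title=escape(title), message=escape(message), links=items
    )
```

Calling `render_maintenance_page("Back soon", "We are fixing things.", [("Status", "https://status.example.com")])` returns an HTML string that you could save as a static file.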

3. Reindex and optimize pages (after downtime)

Fixing technical issues on the website and getting back online is not the end of your problems – depending on the length of the downtime period, the SEO of your site could have already been negatively impacted by the Google algorithm.

In this case, it is always a good practice to check what exactly happened to your site while it was offline – whether you lost some of the rankings for your important keywords, or if your pages were deindexed by Google.

Quick tip: If you are using a rank-tracking tool like SERPWatcher, you can quickly check whether or not you have lost some of the ranking positions for your important keywords.

In SERPWatcher, you can simply open your list of keywords that you are tracking → select a specific timeframe (e.g. for the past 7 days) and see whether or not there was a significant ranking drop for your pages:

This should give you a very general overview of your ranking positions after the website downtime.

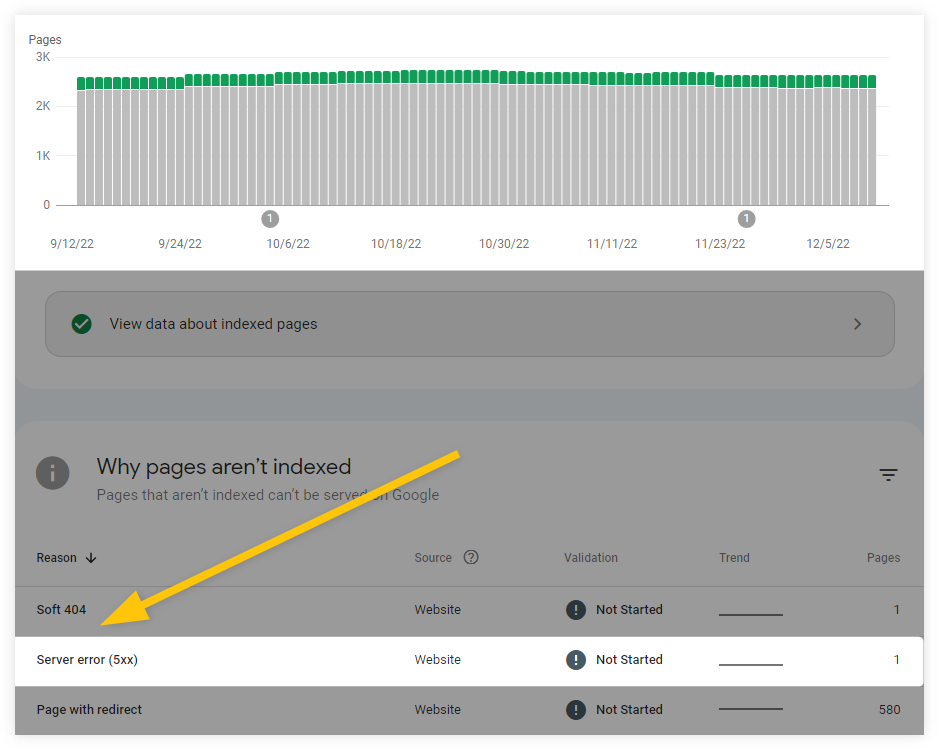

To check whether your website suffered from deindexation, head over to Google Search Console → Pages and review the pages that are not indexed because of a Server error (5xx):

This should give you a general idea about the state of your website and help you to find out whether your important pages are still properly indexed.

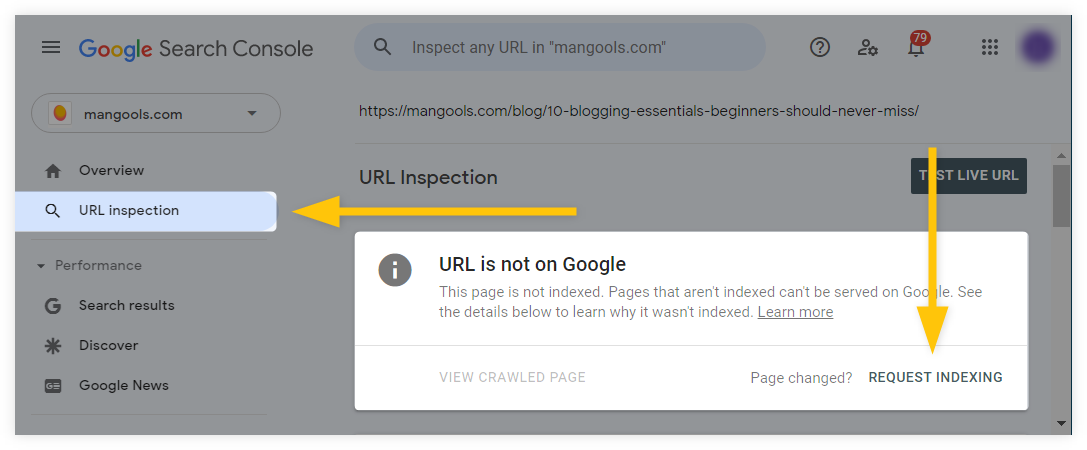

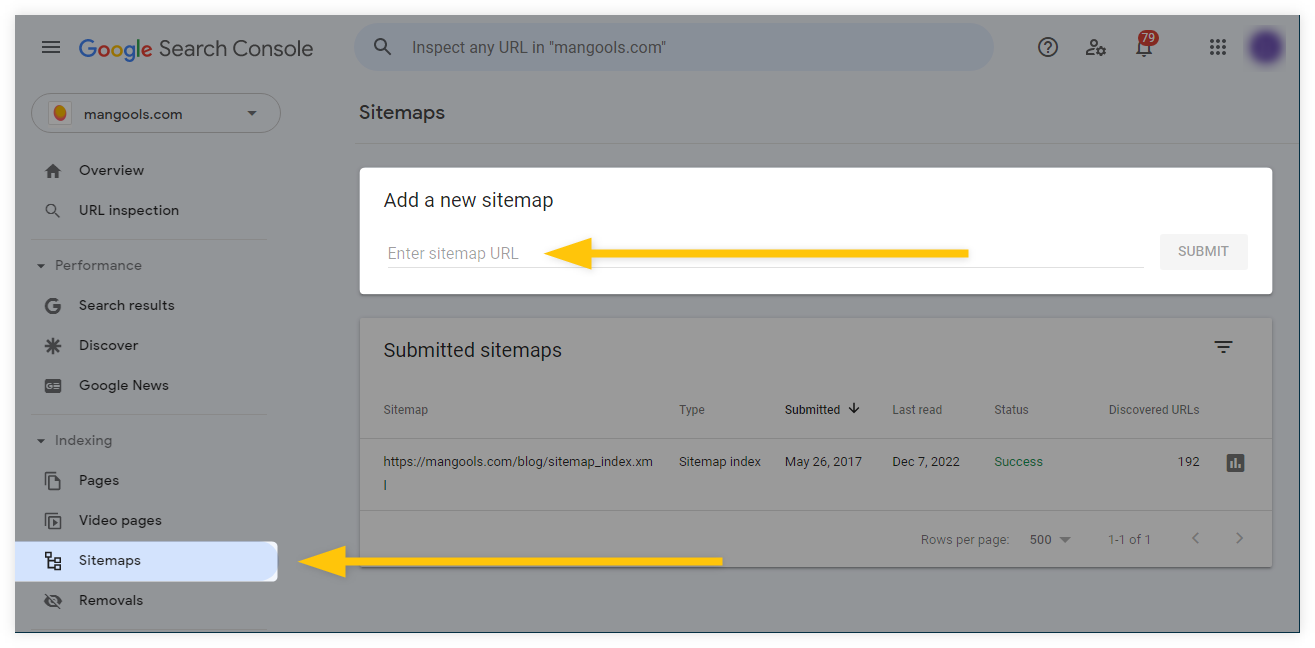

If some of your important pages were removed from Google Index, you can ask Googlebot to recrawl your whole website (or just individual pages):

1. Use the URL Inspection Tool to manually request indexing of a page (recommended only for a few URLs):

2. Submit a sitemap for a large number of URLs (or for the whole website) that were removed from Google Index:

For more information about how to recrawl (and reindex) your URLs, check out the official documentation by Google Search Central.
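If you don’t have a sitemap yet, the format itself is simple. Here is a minimal Python sketch that builds one from a list of URLs using the standard sitemaps.org namespace. The function name `build_sitemap` and the example URLs are assumptions, and most CMS platforms or SEO plugins can generate a sitemap for you anyway.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as UTF-8 bytes) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL for us.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

You could then write the result to a file, e.g. `open("sitemap.xml", "wb").write(build_sitemap(["https://example.com/", "https://example.com/blog/"]))`, and submit that file in Google Search Console.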

Keep in mind, though, that reindexing itself won’t get your pages back to the top of the SERPs – in order to improve your Google rankings, you need to boost your technical SEO, provide a great user experience as well as have high-quality content on your website.

Or as John Mueller officially stated:

“Do not assume that a drop in rankings after a temporary drop in indexing will fix itself — that’s something you need to address, not something to wait out.”

There are many ways to improve your pages after the downtime period and boost your SEO, such as:

- Improve page speed

- Provide XML sitemap

- Optimize images

- Boost your on-page SEO

- Create high-quality content

- … and many many more.

How to be prepared for website downtime (best practices)

a) Utilize monitoring service

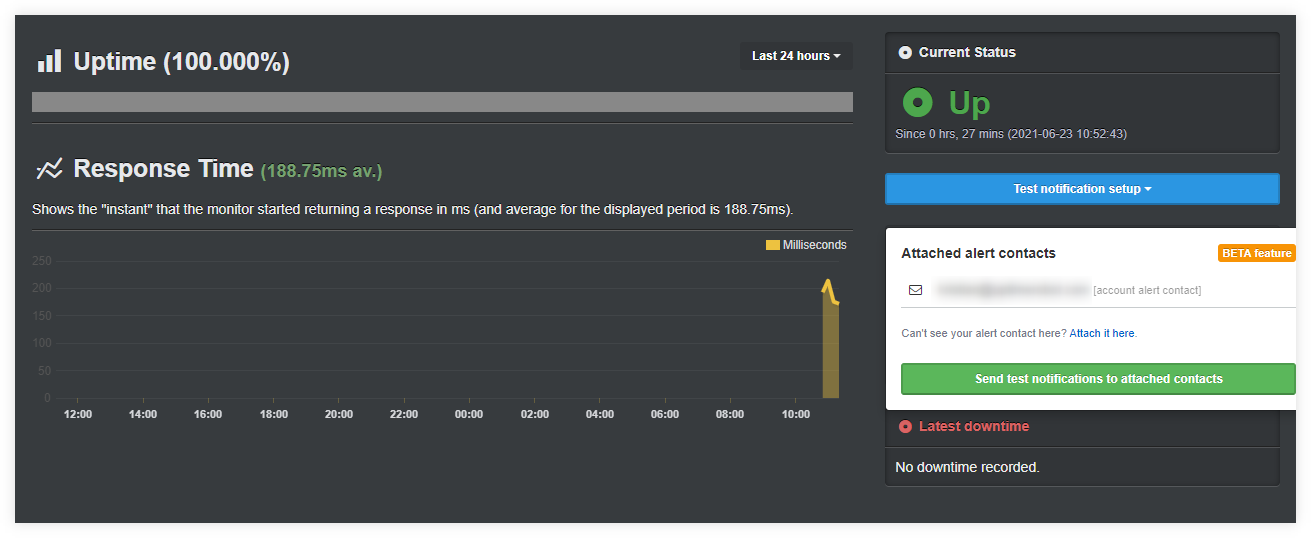

A website monitoring service is like a “warden of your website” – it can alert you whenever your website experiences unexpected downtime.

Thanks to the monitoring tools like UptimeRobot, you can immediately react to any technical issues that caused the site blackout and start fixing the problem right away.

Remember: When it comes to downtime vs. SEO, time is of the essence.

UptimeRobot can monitor your website 24 hours a day, no matter whether you are working, asleep, or on vacation. It checks the website every 60 seconds to see whether it works properly or if there is some technical issue that you should know about.
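To get a feel for what a single check involves, here is a simplified Python sketch of one. This is not how UptimeRobot is implemented; the function names and the rule "2xx or 3xx means up" are assumptions made for this illustration.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10.0):
    """Return the HTTP status code for `url`, or None if the site is unreachable."""
    # Assumption: a HEAD request is enough; some servers only answer GET properly.
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions but still carry a code.
        return error.code
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, timeout, and similar problems.
        return None


def is_up(status):
    """Assumption for this sketch: any 2xx or 3xx response counts as 'up'."""
    return status is not None and 200 <= status < 400


def check_site(url, fetch=fetch_status):
    """Run one check; `fetch` can be swapped out, e.g. for testing."""
    status = fetch(url)
    return {"url": url, "status": status, "up": is_up(status)}
```

A real monitor would run `check_site` on a schedule (for example every 60 seconds), from more than one location, and send an alert when `up` turns `False`. That is exactly the part a dedicated monitoring service takes off your hands.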

Depending on your preferences, you can quickly get notified about any potential technical issue on your website via several possible channels and devices – whether it’s a simple email message, SMS, voice call, app, or even via Twitter notification:

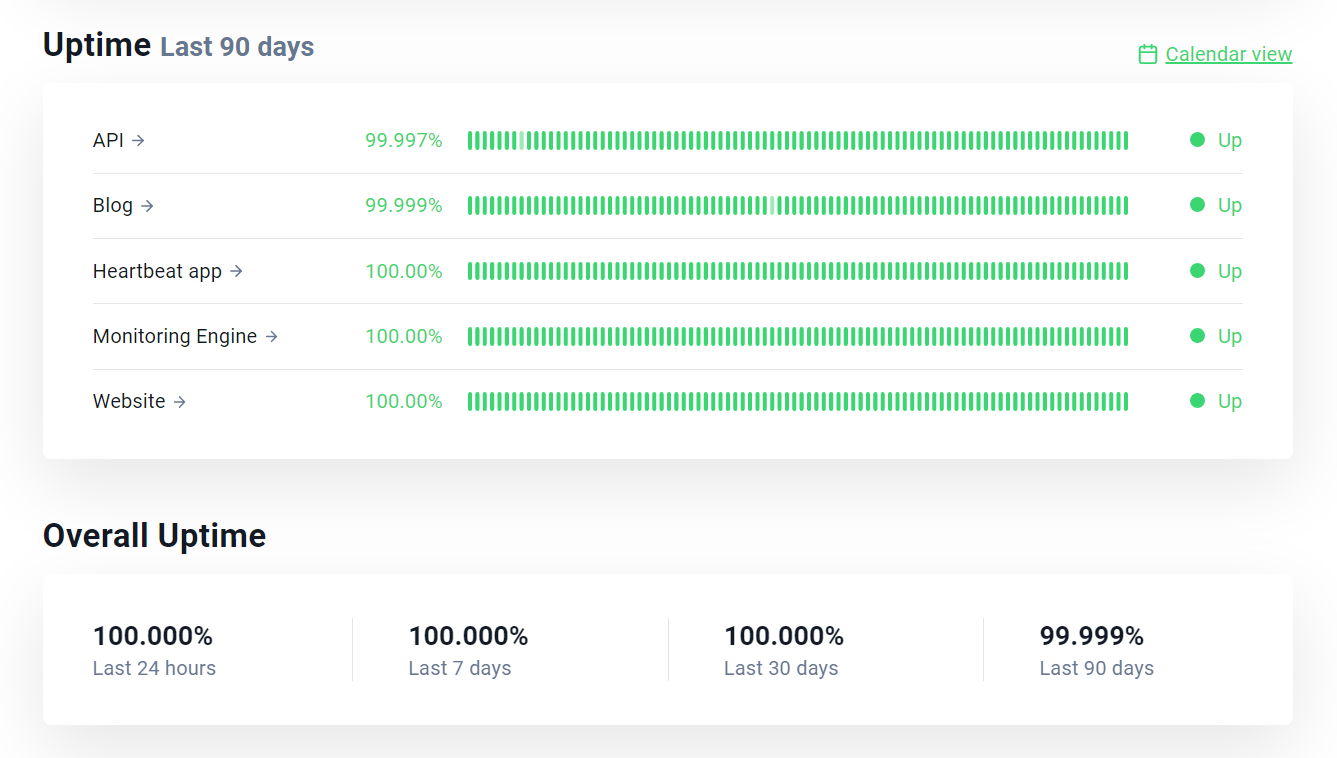

To better communicate with your website visitors (or regular customers), you can even set up a custom status page that can inform users about the unexpected outage or planned maintenance – this will help you to build up trust with your regular customers and let them know to stay tuned until your website goes back online!

Tip: Besides website monitoring, UptimeRobot also provides several interesting features, such as:

- SSL Certificate monitoring

- Keyword-based monitoring

- Cron job monitoring

- Port monitoring

- Ping monitoring

You can try UptimeRobot for free for 50 monitors with 5-minute checks (no credit card needed).

b) Back up your data

Having a backup service or plugin is an important protective aspect during the downtime period.

By backing up your data, you can quickly restore your website to the most recent (and stable) state and get your most important pages back online ASAP.
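As a rough illustration, a file-level backup can be as simple as packing your site’s directory into a timestamped archive. The Python sketch below does just that. The function name `backup_directory` and the naming scheme are assumptions, it covers files only (not your database), and the backup folder should live outside the site directory.

```python
import shutil
from datetime import datetime, timezone
from pathlib import Path


def backup_directory(site_dir, backup_dir):
    """Pack `site_dir` into a timestamped .tar.gz inside `backup_dir`.

    Returns the path of the created archive.
    """
    site_dir = Path(site_dir).resolve()
    backup_dir = Path(backup_dir).resolve()
    backup_dir.mkdir(parents=True, exist_ok=True)

    # A UTC timestamp keeps archive names unique and sortable.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    archive_base = backup_dir / f"{site_dir.name}-{stamp}"

    # make_archive appends the ".tar.gz" extension and returns the final path.
    archive_path = shutil.make_archive(
        str(archive_base), "gztar", root_dir=str(site_dir)
    )
    return Path(archive_path)
```

For example, `backup_directory("/var/www/my-site", "/backups")` (both paths are hypothetical) would create something like `/backups/my-site-<timestamp>.tar.gz`.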

There are multiple ways to back up your website.

If you are using WordPress, you can back up your site and your database by following these official WordPress instructions.

In addition to that, you may consider using one of the popular backup plugins such as:

Plugins like these can help you to restore your website content and quickly get you back on track.

c) Use reliable hosting (with CDN)

Web hosting plays a crucial role in a website’s stability and reliability – if your hosting plan comes with, for example, limited bandwidth or visitor capacity, it might be just a matter of time until your website experiences its first outage.

Although things like loading speed or the maximum number of unique visitors are important features, you should definitely look out for a hosting service that also includes things like:

- Server caching

- Fast (and large) SSD storage

- SSL certificates

- NGINX

- Good technical support

- PHP 7.3, 7.4 or 8

You might not utilize all of these features, but you will definitely need some of them in order to prevent your website from crashing.

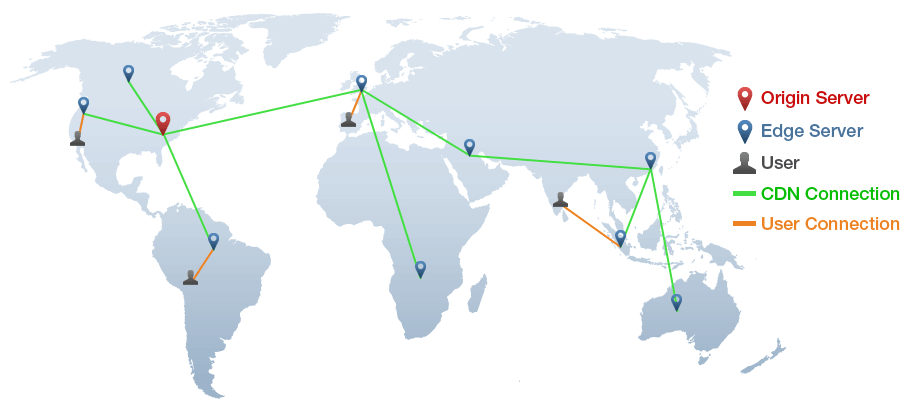

In addition to that, you should consider setting up a CDN (Content Delivery Network) – a network of physical servers in various locations in which your site can be cached and used during a downtime period.

Here’s how CDN works in a nutshell (by GTMetrix):

Whenever one of the primary servers goes down (causing a risk of an outage), another server in the CDN network can take over your website and deliver its content to users (and search engines) without any downtime period.

CDN also reduces the physical gap between your website and its visitors – which can significantly boost your overall site speed.

Tip: Although setting up a CDN may require some experience, it might be possible to implement this feature all by yourself.

If you would like to know more about how to implement CDN service for your website, check out this step-by-step guide on implementing Cloudflare CDN.

In case you are not really sure how to do it, you can simply ask an expert – at the end of the day, it is a one-time setup.

d) Improve website security

Implementing protective measures on the website is a “must-have” feature that you should definitely consider – now more than ever.

Although there is never a 100 percent sure way of protecting your data from malicious attacks, the least you can do is implement some piece of protective software.

There are many decent plugins that you can use as site protection against malicious outages, viruses, or even DDoS attacks, such as:

- Jetpack Scan – an easy tool that performs automated scanning and provides fixes for any security threat.

- Wordfence – a popular WP plugin that provides a security firewall and malware scans.

- WPScan – a free WordPress security plugin that performs security checks and notifies you about various possible website vulnerabilities.